Rising Amounts of Information

Looking at the total amount of information created worldwide, we see an exponentially rising curve. According to the 2017 Internet Trends study, the total amount of information generated in the world today is about 16 zettabytes (ZB). Based on Kleiner estimates, this number will grow at least tenfold, to over 160 ZB, by 2025.
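To put the tenfold figure in perspective, the short sketch below works out the constant yearly growth rate it implies. It assumes that "today" means 2017, the year of the study, so the growth is spread over eight years; that baseline year is an assumption, and the function name is purely illustrative.

```python
def implied_annual_growth(start_zb, end_zb, years):
    """Return the constant yearly growth rate that turns start_zb into end_zb."""
    return (end_zb / start_zb) ** (1 / years) - 1


# Assumption: the 16 ZB figure refers to 2017, so 2025 is eight years away.
rate = implied_annual_growth(16, 160, 2025 - 2017)
print(f"Implied growth: {rate:.1%} per year")
```

Under that assumption, the total would need to grow by roughly a third every year to reach 160 ZB by 2025.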

Where Will All This Information Come From?

There are two main drivers of this explosion: mobile devices and the IoT revolution. Take a moment to think about how many sensors we have in our homes, cars, and offices these days. If each of us has at least 10 sensors and one mobile phone (or more in the future), we can easily imagine a path to a 10x data explosion over time.

As data volumes keep climbing, most organizations struggle to manage these huge quantities of data today. Current data management technologies are buckling under the load. However, companies are entrenched in these technologies and feel locked in to a vendor or a technology; they are increasingly adopting an "open source first" policy as a way to avoid this lock-in. These enterprises now rely on open-source technologies to run key segments of their infrastructure, such as big data and Hadoop, and many of these projects are going to be around for the long haul. The IPOs of companies like Cloudera and Hortonworks show how important open source is in today's enterprise technology stack.

Big Data Insights Into Real-time Actions

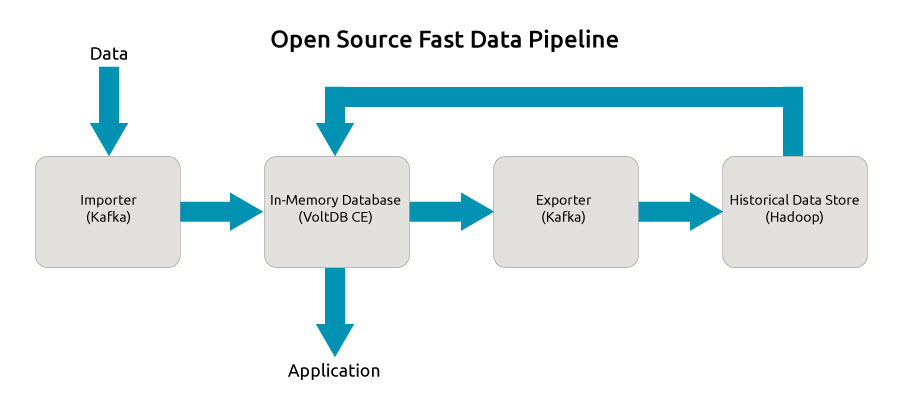

The next question for these enterprises is "How do we effectively put these big data insights into action in real time?" There are not many good open-source solutions that meet this need at both the required scale and latency. One approach to this problem is to build a fast-data pipeline.

Data enters through an importer, such as Kafka. Then, it goes to an in-memory operational database, such as Volt Active Data Community Edition, which powers the application. Next, the data is exported (in this example, through Kafka again) to a historical data store, where key insights are generated. Those insights are fed back to the operational database, where they are used to inform and drive the application. By using a truly open-source fast-data pipeline like the one in this example, you can avoid vendor lock-in and drive your applications with real-time insights.
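The loop described above can be sketched in a few lines of plain Python. This is a toy simulation under stated assumptions, not a real deployment: in-process queues stand in for the Kafka import and export topics, a dictionary stands in for the in-memory operational database, and a list stands in for the historical store. All function names, the "heavy user" threshold, and the "offer" decision are hypothetical and exist only to show the flow of data and the feedback of insights.

```python
from collections import defaultdict, deque

# Hypothetical stand-ins, not real client APIs: deques play the Kafka import
# and export topics, a dict plays the in-memory operational database, and a
# list plays the historical data store.
import_topic = deque()
export_topic = deque()
operational_db = {"counts": defaultdict(int), "insights": {}}
historical_store = []


def ingest(events):
    """Step 1: events enter the pipeline through the importer."""
    import_topic.extend(events)


def process_operational():
    """Step 2: update operational state, decide per event, queue for export."""
    decisions = []
    threshold = operational_db["insights"].get("heavy_user_threshold")
    while import_topic:
        event = import_topic.popleft()
        user = event["user"]
        operational_db["counts"][user] += 1
        if threshold is not None and operational_db["counts"][user] >= threshold:
            decisions.append((user, "offer"))
        export_topic.append(event)
    return decisions


def export_to_history():
    """Step 3: drain the export topic into the historical store."""
    while export_topic:
        historical_store.append(export_topic.popleft())


def feed_back_insights():
    """Step 4: derive an insight from history and feed it back."""
    per_user = defaultdict(int)
    for event in historical_store:
        per_user[event["user"]] += 1
    if per_user:
        mean = sum(per_user.values()) / len(per_user)
        operational_db["insights"]["heavy_user_threshold"] = mean


# First pass: no insight exists yet, so no decisions are made.
ingest([{"user": "alice"}, {"user": "alice"}, {"user": "alice"}, {"user": "bob"}])
first_decisions = process_operational()
export_to_history()
feed_back_insights()

# Second pass: the fed-back threshold now drives in-the-moment decisions.
ingest([{"user": "carol"}, {"user": "alice"}])
second_decisions = process_operational()

print(first_decisions)
print(second_decisions)
```

In this run, the first pass produces no decisions because the historical store has not yet generated an insight. After the feedback step sets the threshold to the mean number of events per user, the second pass flags only the user whose running count meets it. In a real pipeline each stand-in would be replaced by the corresponding system, and the insight would typically come from a far richer analysis than a simple mean.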

Driving Additional Business

As customers increasingly choose to do business only with companies that keep up with them in real time, enterprises are taking a hard look at how to use cutting-edge technology to create sustained competitive differentiation in the market. The fast-data pipeline is an effective way not just to stay on top of large streams of mobile, user, and IoT data, but also to use that data effectively by making in-the-moment decisions that drive additional business.